360 camera for industrial inspection with ROS and Robosynthesis robots

Together with Robosynthesis we’ve been developing and integrating a 360 camera module for our ROS-based industrial inspection robots. I was so pleased with the results that I couldn’t pass up the opportunity to share what we’ve been up to.

Background

In one of the projects we worked on we ran into an issue: teleoperating a robot in an environment filled with obstacles was really difficult with the cameras we had at the time. In that particular project we ended up with the following camera setup:

- One camera was pointed forward

- Two cameras were pointing to the sides

- A fourth camera was attached at the top of the robot, looking down, allowing the operator to get a good view of what’s next to the robot’s wheels

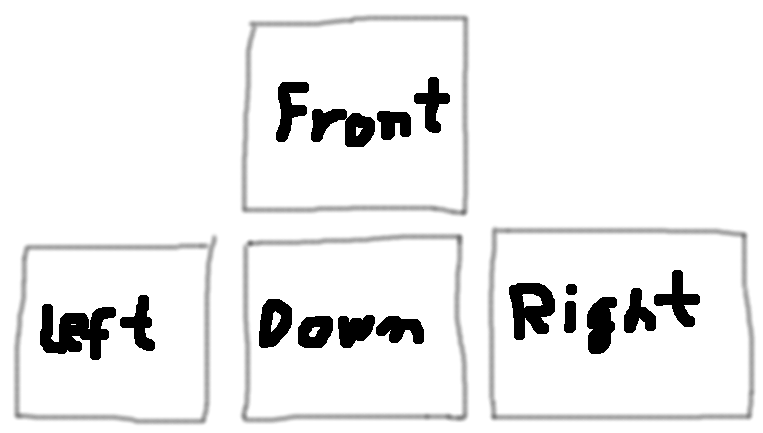

The user interface for this setup looked something like this:

Each of the boxes above showed the operator one camera view. This worked well enough but wasn’t very intuitive. Some months later we started exploring what we called a helicopter 360 view.

We managed to come up with a solution that rectified the images from all four cameras simultaneously. The idea at the time was that with a high enough field of view we should be able to create a nice top-down projection of the environment around the robot. There is definitely value in a top-down projection, but why constrain yourself to a 2D view when you can go full 360?

360 camera ROS module in action

Before we jump into the technical details of the solution let me show you how it works:

In this video I’m able to use my mouse to look around the 360 sphere that has the camera feeds overlaid on it. The interface is very intuitive: you just click and drag the mouse to look at the environment and use the scroll wheel to zoom in or out. There are a couple of things I like about this solution:

- Coverage - compared to the alternative solutions, we are looking everywhere around the robot at the same time. This is great for inspection because we can record the data and play it back later, allowing us to focus on many points of interest

- Ease of use - this solution is way more intuitive to the operator than our previous 4 separate camera views

- Manageable latency - you can actually operate a robot using this view as the only camera feed

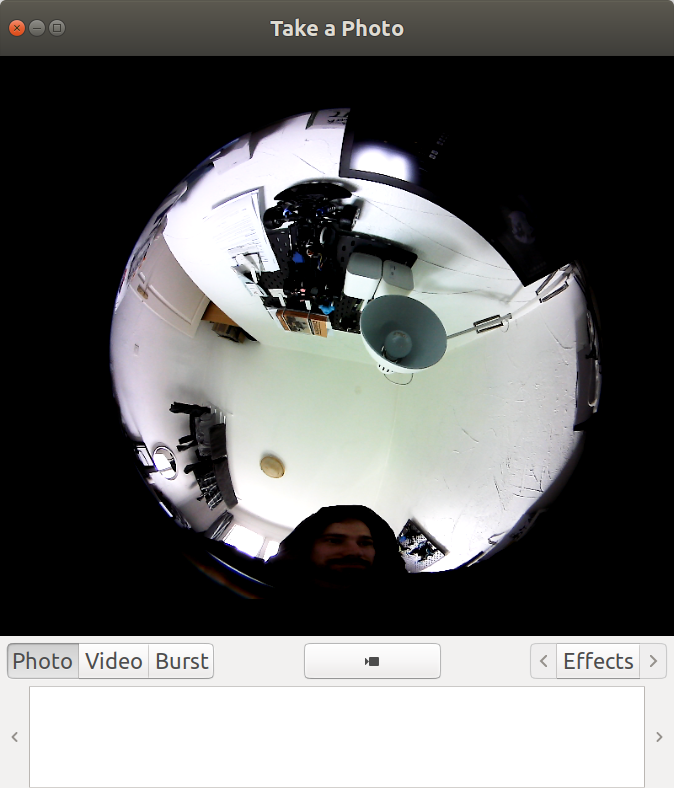

The first step towards our solution was getting USB cameras with a wide field of view. We ended up with 220-degree FoV USB cameras. The first test was getting the image feed from a single camera:

Then we entered a very rapid prototyping stage with this handy prototype:

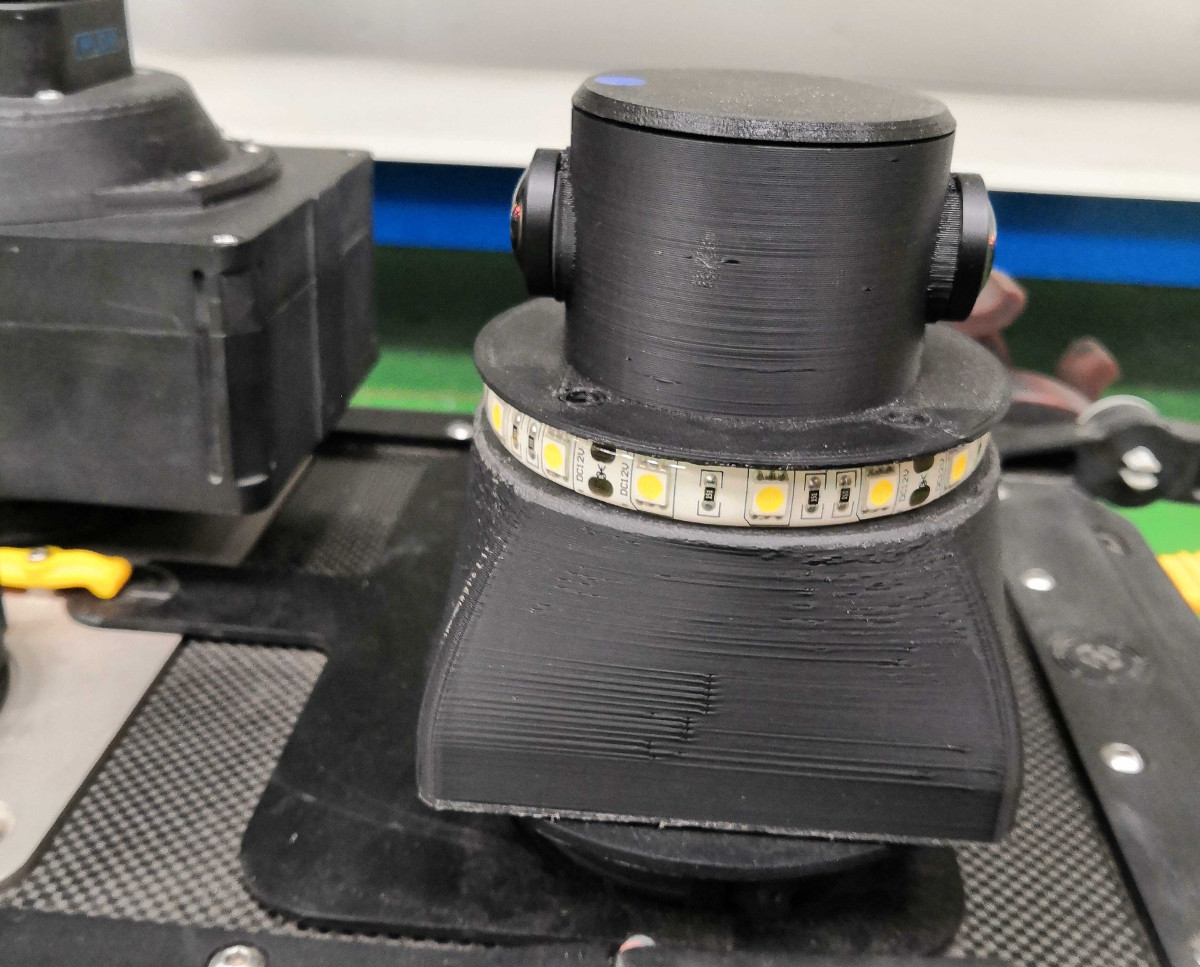

This first prototype allowed me to quickly prove the concept and prepare and test the software stack. In less than a week from the first proof of concept the team took it to the next level:

In this short period of time we have:

- Designed the casing

- Added a flood light that can be triggered with a ROS service call

- Made it into a module that can be plugged in anywhere on the robot’s deck

Technical details

I hope that at this point you are wondering how we make this 360 view. The core piece of software that we’ve used is the RVIZ Textured Sphere open source plugin developed by researchers from the Nuclear and Applied Robotics Group at the University of Texas. The plugin takes the image feeds from two sources and applies them onto a 3D sphere.
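To give a feel for what wrapping two fisheye feeds onto a sphere involves, here is a minimal sketch. It is not the plugin’s code. It assumes an ideal equidistant fisheye model (distance from the image centre proportional to the angle from the optical axis), two cameras facing opposite directions along the x axis, and square 600 px images; the function name `direction_to_pixel` and its parameters are hypothetical.

```python
import math


def direction_to_pixel(direction, image_size=600, fov_deg=220.0):
    """Map a 3D viewing direction to (camera_index, u, v).

    Assumptions for this sketch: camera 0 looks along +x, camera 1 along -x,
    each image is a square of image_size pixels with the fisheye circle
    centred in it, and the lens follows an ideal equidistant model.
    """
    x, y, z = direction
    norm = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / norm, y / norm, z / norm

    # Pick the camera whose optical axis is closest to the direction.
    camera = 0 if x >= 0.0 else 1
    if camera == 1:
        # Rotate 180 degrees about z so the rear optical axis maps to +x.
        x, y = -x, -y

    # Angle between the direction and the optical axis.
    theta = math.acos(max(-1.0, min(1.0, x)))
    # Angle around the optical axis, measured in the image plane.
    phi = math.atan2(z, y)

    # Equidistant model: the radius grows linearly with theta and reaches
    # the edge of the fisheye circle at half the field of view.
    max_theta = math.radians(fov_deg) / 2.0
    radius = (theta / max_theta) * (image_size / 2.0)

    centre = image_size / 2.0
    u = centre + radius * math.cos(phi)
    v = centre - radius * math.sin(phi)  # image rows grow downwards
    return camera, u, v


if __name__ == "__main__":
    print(direction_to_pixel((1.0, 0.0, 0.0)))  # straight ahead, camera 0
    print(direction_to_pixel((0.0, 1.0, 0.0)))  # on the seam between cameras
```

With a 220-degree field of view each camera sees past its own hemisphere, so directions near the seam are visible to both cameras. This sketch simply picks one camera; a real renderer would likely need to blend or choose within that overlap.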

Since the first prototype used USB cameras, we used standard UVC drivers for ROS. Unfortunately the cameras were suboptimal: they output a rectangular frame around the circular fisheye view. Because of that we had to post-process the images to make them square, with the fisheye view in the center.
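The squaring step can be sketched roughly as follows. This is an illustration with NumPy rather than our actual node: the helper name `square_around_circle` is hypothetical, and the circle centre and radius are made-up values that would in practice come from inspecting or calibrating the camera image.

```python
import numpy as np


def square_around_circle(frame, centre_xy, radius):
    """Return a square image of side 2 * radius with the fisheye circle centred.

    frame: H x W (x C) array. centre_xy: (cx, cy) pixel centre of the circle.
    radius: circle radius in pixels. Areas outside the frame stay black.
    """
    cx, cy = centre_xy
    side = 2 * radius
    out = np.zeros((side, side) + frame.shape[2:], dtype=frame.dtype)

    # Top-left corner of the circle's bounding box in source coordinates.
    x0, y0 = cx - radius, cy - radius
    # Clip the bounding box to the part that exists in the frame.
    sx0, sy0 = max(x0, 0), max(y0, 0)
    sx1 = min(x0 + side, frame.shape[1])
    sy1 = min(y0 + side, frame.shape[0])

    # Copy the visible part into the matching location of the output.
    out[sy0 - y0:sy1 - y0, sx0 - x0:sx1 - x0] = frame[sy0:sy1, sx0:sx1]
    return out


if __name__ == "__main__":
    frame = np.ones((600, 800, 3), dtype=np.uint8)  # stand-in for a camera frame
    squared = square_around_circle(frame, centre_xy=(420, 300), radius=320)
    print(squared.shape)
```

Padding with black instead of stretching the frame keeps the fisheye circle undistorted, which matters if the image is later mapped onto a sphere.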

Since we want the 360 camera view to be used by the operator, a hard requirement for us is latency below 1 second. In this first prototype we saw around 500 ms of latency at a resolution of 800x600 px for a single camera. In the next iteration I’d like to drive the latency below 300 ms while increasing the camera resolution. Stay tuned for future updates!
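One simple way to keep an eye on such a latency budget is to compare each frame’s capture timestamp with the time it arrives at the viewer. The sketch below is an assumption about how this could be tracked, not a description of how we measured the numbers above; the `LatencyMonitor` class is hypothetical. In a ROS node the two inputs could come from `msg.header.stamp.to_sec()` and `rospy.get_time()`.

```python
from collections import deque


class LatencyMonitor:
    """Track frame latency over a sliding window against a budget."""

    def __init__(self, budget_s=1.0, window=30):
        self.budget_s = budget_s
        self.samples = deque(maxlen=window)  # most recent latencies, in seconds

    def add(self, stamp_s, received_s):
        """Record one frame: capture time and arrival time, both in seconds."""
        latency = received_s - stamp_s
        self.samples.append(latency)
        return latency

    def mean_ms(self):
        """Mean latency over the window in milliseconds, or None if empty."""
        if not self.samples:
            return None
        return 1000.0 * sum(self.samples) / len(self.samples)

    def within_budget(self):
        """True if every latency in the window is within the budget."""
        return all(s <= self.budget_s for s in self.samples)


if __name__ == "__main__":
    monitor = LatencyMonitor(budget_s=1.0)
    monitor.add(10.0, 10.5)
    monitor.add(11.0, 11.25)
    print(monitor.mean_ms(), monitor.within_budget())
```

Note that this only covers the path from the frame’s timestamp to the subscriber. Sensor exposure, rendering and display add further delay that would need to be measured separately.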

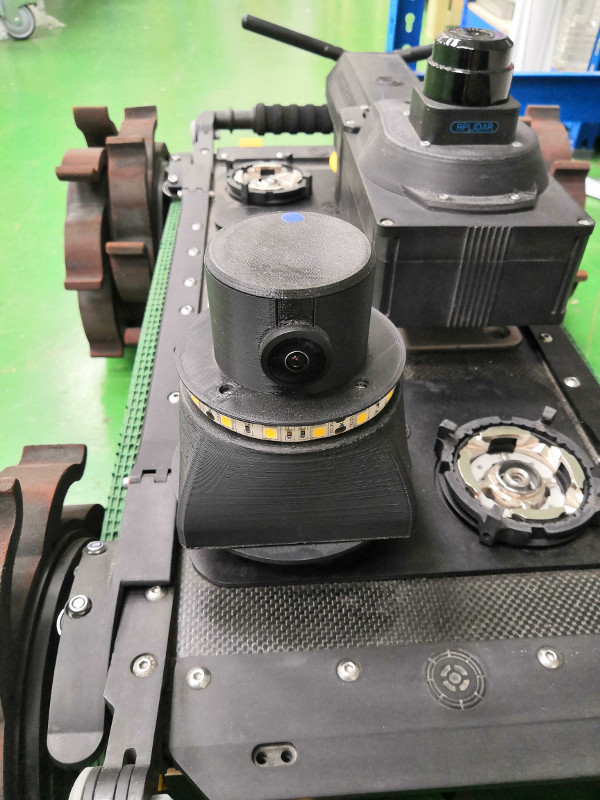

As a bonus, here is a picture of two Robosynthesis robots with heaps of modules, ready for industrial inspection.